Understanding potential pitfalls in migration from Fivetran

A short story on how we tried to cut the EL(T) costs at Osome and the aftermath.

Context

I’ve joined Osome 7 months ago as a VP Analytics. At my previous company Export and Load was managed by handwritten Airflow DAGs and supported by 5+ Data Engineers.

Here at Osome we only have 1 Data Engineer who supports all the infra.

We use modern data stack: Fivetran, Google Big Query, dbt, and Looker.

In 2022 we’ve paid around 400k$ per year for our data stack.

One of my goals for 2023 was to decrease the data team spend.

A bit info about a Fivetran

Fivetran is a data movement platform. All it does is pull the data from sources and upload them to the destination. There is some set of customizations available for a user like column blocking and hashing, but we don’t use them a lot.

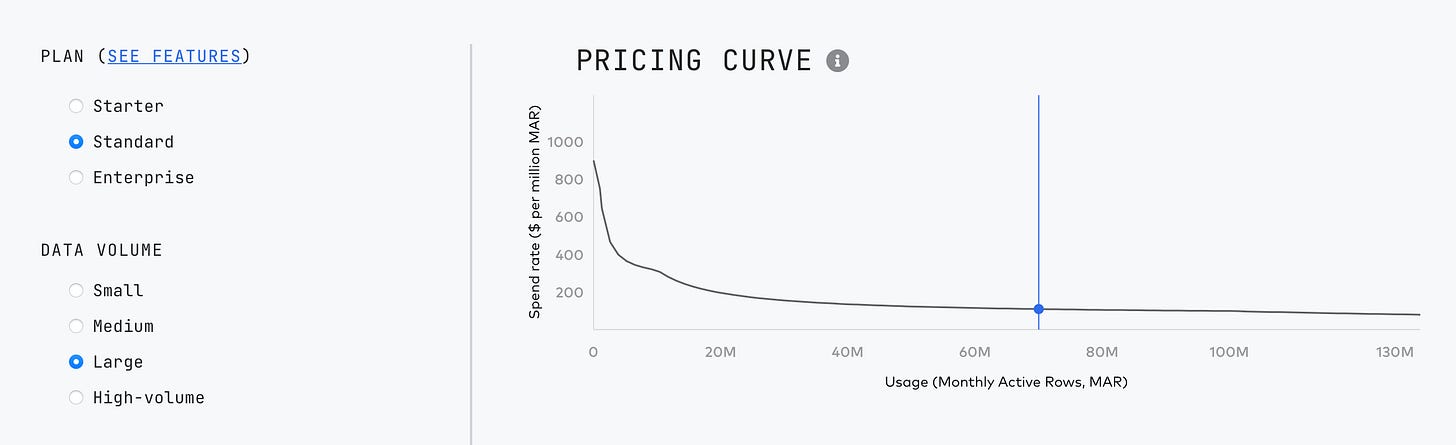

It prices the usage based on the MAR (monthly active rows) concept, basically you pay for each updated row. Their pricing is logarithm based, which means that you pay less for every new row (or vice versa, with 30% decrease in the usage we’ll pay only 15% less)

The biggest advantage of Fivetran is that the code is pre-written for you and there is no need to track API changes, debug and even monitor runs. In fact, we’ve never really opened Fivetran dashboard, everything was running smoothly in the background and we were happy about it.

We had our Fivetran renewal in the end of April and our original plan was to negotiate a better deal (preferably half the cost for the same usage) and keep Fivetran as a main tool for EL.

Data engineering analysis

As I’ve already mentioned, one of the goals for 2023 was to decrease the data team spend. 95% of our data spend (excluding the team) is in:

Google Big Query (pay as you go, per TB)

Looker (annual commitment, per user)

Fivetran (annual commitment, per row synced)

In this article I’ll focus on Fivetran and our attempt to cut Fivetran cost. We had annual contract with renewal date in the end of April.

Fivetran renewal team approached us in the end of February (2 months before the end of current contract) to negotiate a contract for the new year. I’ve requested an export of our current usage split by sources to analyse the usage.

Surprisingly, 80% of our usage was Postgres. We’ve payed a lot for a CDC mechanism. The major issue was that the usage was unevenly distributed across different months with more than 70% variation. This was mostly due to the migrations which happened on the source tables and Fivetran had to run full refresh for the tables affected. And since they charge you for each updated row it cost us a significant amount of money.

Alternatives

The initial idea was to compare Fivetran with alternatives and make a decision. We had a list of criteria for the EL(T) tool:

Easy to setup

Reliable

Supports our sources (Postgres, GA, HubSpot, etc.)

Cost efficient (vs data engineer salary)

The first go-to product was Airbyte as a direct Fivetran alternative. I’ve quickly checked Airbyte pricing and realised that it’s going to be around half the price for the same usage!

The other alternative with an easy-to-setup CDC from PG was Google Datastream. It was recently released to a public version for Postgres, 1/8 (!!) of the Fivetran cost on the paper. In fact, it cost 1/20th of the Fivetran for the same volume of data (compression?)

Unfortunately, none of the solutions worked out of the box for us:

Airbyte uses different WAL plugin (

pgoutputinstead oftest_decoding). It meant that we had to change the settings on sources.We’re actively using TOAST columns in the source tables:

Airbyte failed on the initial sync due to large rows. Support team quickly fixed the issue though

Google Datastream has a limitation of 3 MB per row and will not sync larger rows (in fact it was around 5 MB according to our tests).

Google Datastream doesn’t support Array, Enum and user-defined data types (as of the date of this post)

Google Datastream only works for databases, which means that we’ll need a second solution for API sources

What if we create a Frankenstack?

Alternative solutions were so much cheaper. So we decided to investigate a creation of Frankenstack: Google Datastream + Fivetran / Airbyte:

Google Datastream for the supported tables/databases (without TOAST columns and tables with Array/Enum/UDT)

Airbyte or Fivetran for the rest (other tables + API sources).

And then the typical dilemma appeared:

We can combine different solutions to decrease the costs

However this would lead us to the data quality decrease:

Table references might not exist due to the difference in syncs

Different schema structure (eg.

_fivetran_deletedvsis_deletedwill confuse analysts)

Negotiations with Fivetran

Having investigated different alternatives we realized that the only option we had on a table for the next year is Fivetran, so we wanted to negotiate a better price.

Position we had:

We’re ready to pay for API sources (Hubspot, GA, etc.)

Cost per row synced for database sources is too high and alternatives are much cheaper, so we’re not ready to pay for it

Fivetran renewal team came up with a new offer which felt like data manipulation. They came up with a discount based on the source connector type and gave 50% discount on Postgres. In the end it appeared to be even more expensive than a regular offer, and was even more expensive per each row synced.

Aftermath and so-whats

Google Datastream is the cheapest no-code way to sync data from Postgres to Big Query (note that they might kill the product later, they often do)

It might be not an easy task to seamlessly migrate from the Fivetran to another solution. The devil is in the details, like TOAST columns.

Fivetran is extremely expensive in comparison with other tools on the market, but it does the job really well. Simple as it is. Test other tools to make your own decision

Even though we tested other alternatives, we made a decision to extend our Fivetran contract for another year:

We couldn't fully migrate from Fivetran in a short period of time → we had to keep it at least for some of the sources

Keeping it at least for some sources meant that we’ll face a Frankenstack and will have to support different tools

Cost of supporting different data tools (and pipelines) was not worth the costs saved, especially taking into account the logarithm-based pricing model of Fivetran

Even though we didn’t succeed the Fivetran migration from the first shot, we’ll do another attempt later this year.

If you’re going to describe Fivetran as “extremely expensive” you should disclose the number. You are paying a fraction of the cost of a single data engineer for the entire ingest side of your data stack.